Evaluating the usability of Grid computing for synchrotron science

Grid computing is a form of geographically dispersed, loosely coupled, distributed computing used to solve very large computational or data-intensive tasks [1]. The potential of Grid technology led the ESRF to include in the Purple Book a specific chapter on applying the EGEE gLite middleware to future data-intensive synchrotron science experiments. Since then, the FP7 grant ESRFUP has provided the funds to conduct a detailed technical feasibility study.

Dedicated hardware, consisting of compute nodes, file servers and Grid middleware servers, was installed at ESRF, SOLEIL, PSI, and DESY to act as Grid sites in the European Grid infrastructure EGEE [2].

Each of those Grid Sites provided the following Grid middleware services: one or more Computing Elements (CE), a Storage Element (SE) to provide access to files, a User Interface (UI), a job accounting server (MONBOX), and a site resource information server (BDII). The different components were hosted in a virtualised environment and distributed over a number of Citrix-Xen servers.

The four Grid Sites (ESRF, SOLEIL, PSI, DESY) formed the Virtual Organisation (VO) X-RAY. The VO was registered in the EGEE Grid and became operational once a number of core middleware components were added, consisting of the Workload Management Server (WMS) to handle and distribute the computing jobs over the infrastructure, a global file catalogue (LFC), as well as the Virtual Organization Management Service (VOMS) to manage users and their access rights.

The testbed served to validate various implementations of storage resource managers (dCache and DPM) [3], data transfers with protocols such as GridFTP [4], the handling of Grid certificates, and the running of synchrotron science data analysis and modelling code, which had to be ported to the Grid beforehand. From the outset we tested not only the purely technical aspects but also the user friendliness of the gLite middleware suite, an important consideration given the large diversity of the synchrotron user community.

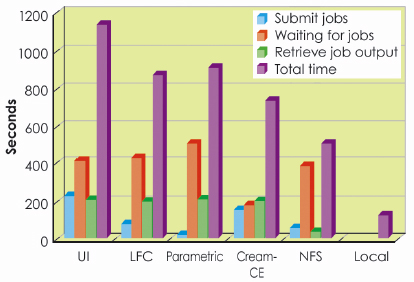

A typical data analysis application that corrects spatial image distortions was used, among others, to measure performance within the X-RAY VO. Several cases were tested, ranging from a setup close to the classic definition of the Grid (the UI mode) to a setup close to a local cluster (the NFS mode). The outcome is summarised in Figure 152. Compared with running this application on a local computer, the various Grid infrastructure layers introduce a significant increase in computation time, mainly because the application data have to be moved to and from the Computing Elements for processing.

Fig. 152: Execution times of the Spatial Distortion Program (SPD, 18 runs with 10 images each) in different Grid environments and in a local environment, showing the overhead introduced by the various Grid middleware layers.
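The overhead described above can be illustrated with a very simple cost model: the wall time of a Grid job is the local compute time plus the time to stage data in and out, plus a fixed middleware latency. The sketch below is not the method used in the study. The function names, the `middleware_latency_s` parameter and all numbers are hypothetical and chosen only for illustration.

```python
def grid_wall_time(compute_s, input_bytes, output_bytes,
                   bandwidth_bytes_per_s, middleware_latency_s=0.0):
    """Estimate the wall time of one Grid job in seconds.

    Assumed model (not taken from the study): fixed middleware latency,
    then stage-in, compute, and stage-out executed one after the other.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    stage_in_s = input_bytes / bandwidth_bytes_per_s
    stage_out_s = output_bytes / bandwidth_bytes_per_s
    return middleware_latency_s + stage_in_s + compute_s + stage_out_s


def overhead_factor(compute_s, input_bytes, output_bytes,
                    bandwidth_bytes_per_s, middleware_latency_s=0.0):
    """Ratio of estimated Grid wall time to pure local compute time."""
    if compute_s <= 0:
        raise ValueError("compute time must be positive")
    total_s = grid_wall_time(compute_s, input_bytes, output_bytes,
                             bandwidth_bytes_per_s, middleware_latency_s)
    return total_s / compute_s


if __name__ == "__main__":
    # Illustrative values only, not measurements from the X-RAY VO.
    factor = overhead_factor(
        compute_s=10.0,              # short job
        input_bytes=100e6,           # 100 MB in
        output_bytes=100e6,          # 100 MB out
        bandwidth_bytes_per_s=10e6,  # 10 MB/s effective throughput
        middleware_latency_s=60.0,   # assumed scheduling delay
    )
    print(f"Estimated slowdown versus local execution: {factor:.1f}x")
```

Under this model the slowdown grows as jobs get shorter or data volumes get larger, which is the regime the text identifies as typical for synchrotron data reduction.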

We found that the EGEE Grid is not well adapted to most synchrotron science applications. The main reasons are:

- EGEE Grids are set up as a collection of independent nodes or services whose integration is not optimised. Data therefore need to be transferred between Grid data storage and the worker nodes every time they are needed. Over public networks this causes a large overhead, which makes data-intensive applications run slower on the Grid than locally.

- For the reasons given above, the EGEE Grid is not optimised for running short, data-intensive jobs. Many of the applications used to reduce or analyse synchrotron science data are short and data intensive.

- The EGEE Grid is not optimised for the fast communication between worker nodes required by applications that use MPI to communicate between their distributed processes. Many modelling applications used in synchrotron science use MPI for distributing the workload.

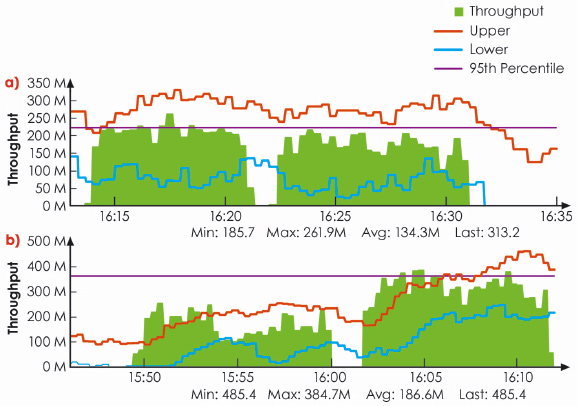

- Public networks are still slow or unreliable for transferring large data sets even with highly optimised Grid protocols like GridFTP. Hence, the Grid is still not an ideal solution for exporting users’ data or storing and sharing data via the Grid (Figure 153).

- Grid certificates provide a secure way to share resources with users. However, they are difficult for users to understand and manage, so they are a workable solution only when users really need them.

- The very concept of Virtual Organizations (VOMS) for managing user authentication was not perceived as a good model for the needs of the rather diverse community of researchers working at synchrotron installations. Typically, users asked for more fine-grained security models such as Access Control Lists (ACLs).

However, the Grid can be a good fit for a certain type of application:

- The EGEE Grid is optimised for running parallel applications which do not need to access data, do not generate large data sets, and run for between 1 hour and 24 hours. A small number of applications used for modelling synchrotron science data fall into this category, and for them the EGEE Grid is well suited. However, this concerns only a very small number of users.
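The criteria listed above can be summarised as a simple screening check. The following is only a sketch of how one might encode them: the `JobProfile` fields and the `fits_egee_profile` function are hypothetical names introduced for illustration, and the boolean flags are a deliberate simplification of what "data intensive" or "large data sets" mean in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobProfile:
    """Coarse description of an application (hypothetical fields)."""
    runtime_hours: float
    needs_input_data: bool         # must read data sets during the run
    produces_large_output: bool    # generates large data sets
    needs_fast_interconnect: bool  # e.g. tightly coupled MPI communication


def fits_egee_profile(job: JobProfile) -> bool:
    """Return True if the job matches the category described in the text:
    no data access, no large output, no fast inter-node communication,
    and a run time between 1 hour and 24 hours."""
    return (
        1.0 <= job.runtime_hours <= 24.0
        and not job.needs_input_data
        and not job.produces_large_output
        and not job.needs_fast_interconnect
    )


if __name__ == "__main__":
    # Illustrative profiles only, not applications from the study.
    modelling_run = JobProfile(6.0, False, False, False)
    image_correction = JobProfile(0.05, True, True, False)
    print("modelling run fits:", fits_egee_profile(modelling_run))
    print("image correction fits:", fits_egee_profile(image_correction))
```

A check of this kind only screens candidates. Whether a given application actually benefits would still have to be measured on the infrastructure.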

Fig. 153: a) 10-channel GridFTP transfer session (in Mbit/s) over a 1 Gbit/s network uplink. The first green block depicts outbound connections, whereas the second is incoming data. The data transfer was done between PSI and ESRF and represents a typical best-case scenario. b) Single-channel IPerf tests (in Mbit/s), a measure of the available throughput capacity of a network link relying on the Transmission Control Protocol (TCP), between PSI and ESRF. The outbound performance is very similar to GridFTP, whereas the inbound performance is significantly better.

In view of the poor overall fit of the Grid for most typical synchrotron science applications, the fact that setting up and maintaining an EGEE Grid installation is very resource intensive, and finally the fact that synchrotron science users are not limited by the currently available computing resources, we think the EGEE Grid in its current implementation is not suited for synchrotron science.

References

[1] I. Foster et al., International J. Supercomputer Applications, 15(3), 2001.

[2] EGEE – Enabling Grids for E-sciencE, http://eu-egee.org.

[3] F. Donno et al., Journal of Physics: Conference Series, 119 (2008) 062028.

[4] GridFTP, http://www.ogf.org/documents/GFD.20.pdf

Authors

F. Calvelo-Vazquez, R. Dimper, A. Götz, C. Koerdt and E. Taurel.

ESRF